First of all, happy new year! Now, on to the cool buzzword – “GenAI”!

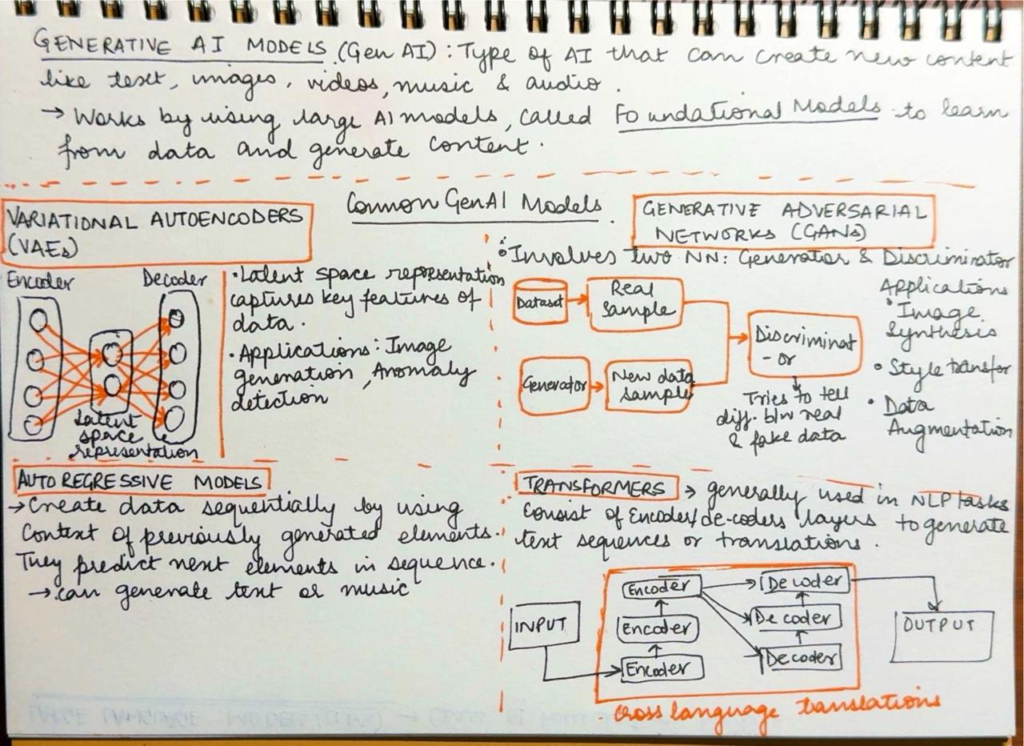

Generative AI (GenAI) is a type of AI that can create new content such as text, images, video, audio, and music. It works by using large AI models called ‘Foundation Models’, which learn patterns from large amounts of data and use them to generate content.

So, if you have heard of or used ChatGPT, Google’s Gemini, etc., you have already interacted with GenAI. 🙂

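A generative AI model can be unimodal (it works with a single type of data, such as text) or multimodal (it can handle several types of data, such as text and images together).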

There are several types of GenAI models, each with its own way of processing data and its own uses.

- Variational Autoencoders (VAEs) – Combine the principles of neural networks and probabilistic graphical models.

    - They use the concepts of an encoder, a latent space representation, and a decoder.

    - The encoder maps the input data to a lower-dimensional latent space representation.

    - The decoder then reconstructs the original input data from the latent representation.

    - The latent space representation captures the key features of the data and is what gets passed to the decoder.

    - Applications: image generation, anomaly detection, data imputation, and semi-supervised learning (for example, improving task performance when labeled data is limited).

- Generative Adversarial Networks (GANs) – Designed to generate new, realistic data samples.

    - They use two neural networks: a generator and a discriminator.

    - The generator’s goal is to fool the discriminator into believing that the generated data is real.

    - The discriminator’s goal is to tell the difference between real and fake data.

    - The two networks train simultaneously. The generator keeps improving its output so that it gets closer to real data, while the discriminator keeps improving its ability to detect fake data.

    - Applications: image synthesis, style transfer, and data augmentation.

- Autoregressive Models – Create data sequentially, using the previously generated elements as context. They capture the dependencies in the data by modeling each data point as a function of the ones before it.

    - Applications: text generation, image generation, and audio synthesis.

- Transformers – Mostly used for Natural Language Processing (NLP) tasks.

    - They consist of encoder and decoder layers and are used to generate text sequences and translations.

    - The raw input is first converted into embeddings, and information about the sequence order is added. The input is then processed by multiple encoder layers. Using the encoder’s output, the decoder generates the output, predicting the output tokens one by one.
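
To make the Transformer step where the input is “converted into embeddings and sequence order is added” a bit more concrete, here is a minimal Python sketch. It is only an illustration, not how any particular library does it: the embedding numbers and the names `positional_encoding`, `embed_with_positions`, and `toy_embeddings` are made up for this example, and it assumes the sinusoidal position scheme, which is one common choice (many models learn their position vectors instead).

```python
import math

def positional_encoding(position, d_model):
    """Sinusoidal encoding for one position (assumed scheme, see the note above)."""
    encoding = []
    for i in range(d_model):
        # Each pair of dimensions (2k, 2k+1) shares one frequency.
        angle = position / (10000 ** ((i - i % 2) / d_model))
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding

def embed_with_positions(token_ids, embedding_table):
    """Look up each token's embedding and add the encoding of its position."""
    d_model = len(embedding_table[0])
    output = []
    for position, token_id in enumerate(token_ids):
        token_vector = embedding_table[token_id]
        position_vector = positional_encoding(position, d_model)
        output.append([t + p for t, p in zip(token_vector, position_vector)])
    return output

# Hypothetical 3-token vocabulary with 4-dimensional embeddings (made-up numbers).
toy_embeddings = [
    [0.1, 0.2, 0.3, 0.4],  # token 0
    [0.5, 0.6, 0.7, 0.8],  # token 1
    [0.9, 1.0, 1.1, 1.2],  # token 2
]

# Token 1 appears at positions 0 and 2 and gets a different vector each time.
vectors = embed_with_positions([1, 0, 1], toy_embeddings)
```

Because the position vector is added to the token vector, the same token at two different positions reaches the encoder as two different inputs, and that is how the model can tell word order apart.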

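The autoregressive idea from the list above, generating one element at a time based on what came before, can be shown with a tiny toy example. This sketch assumes the simplest possible case, where each word depends only on the previous word (a bigram model); real GenAI models look at far more context and use neural networks. The corpus and the names `train_bigram` and `generate` are hypothetical.

```python
import random
from collections import defaultdict

def train_bigram(tokens):
    """Record, for each token, which tokens followed it in the training data."""
    followers = defaultdict(list)
    for current, nxt in zip(tokens, tokens[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, max_tokens, rng):
    """Generate one token at a time, each picked from what followed the previous token."""
    sequence = [start]
    while len(sequence) < max_tokens:
        candidates = followers.get(sequence[-1])
        if not candidates:  # no known continuation, so stop early
            break
        sequence.append(rng.choice(candidates))
    return sequence

# Made-up training text for illustration only.
corpus = "the cat sat on the mat and the cat ran".split()
model = train_bigram(corpus)

# A seeded random generator keeps the run repeatable.
sample = generate(model, "the", 6, random.Random(0))
print(" ".join(sample))
```

Every word in the output is chosen only after the previous word is known, which is the same “one by one” pattern the Transformer decoder follows when it predicts output tokens.
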
With that, see you soon! 🙂
