Yes, I made it to week 2 (almost)! This week was about understanding the very basics of Neural Networks (NN). There will be a part 2 on this topic soon.

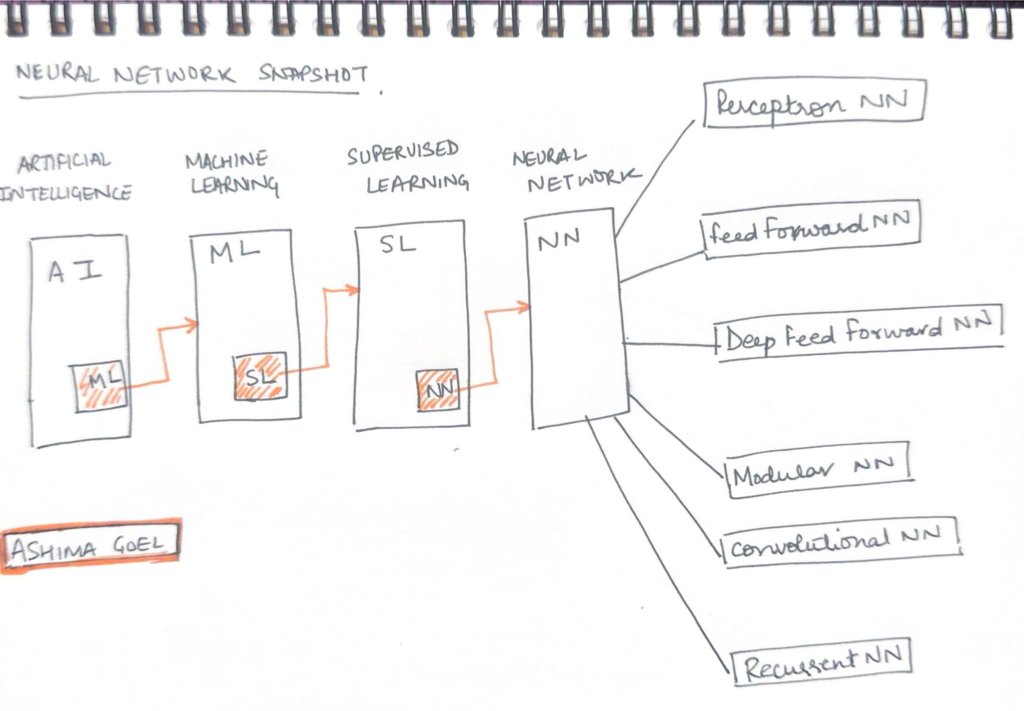

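A quick refresher. Neural Networks are usually introduced as a subset of Supervised Learning, which is a subset of Machine Learning, which in turn is a subset of AI (in practice they are also used for unsupervised and reinforcement learning). And within Neural Networks there are six types.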

What are Neural Networks (NN)?

A Neural Network is typically taught as part of Supervised Learning and is inspired by the structure and function of the human brain. Similar to the neurons in the brain, it has interconnected nodes (also referred to as neurons). These ‘neurons’ are organized in layers and designed to process information and learn patterns.

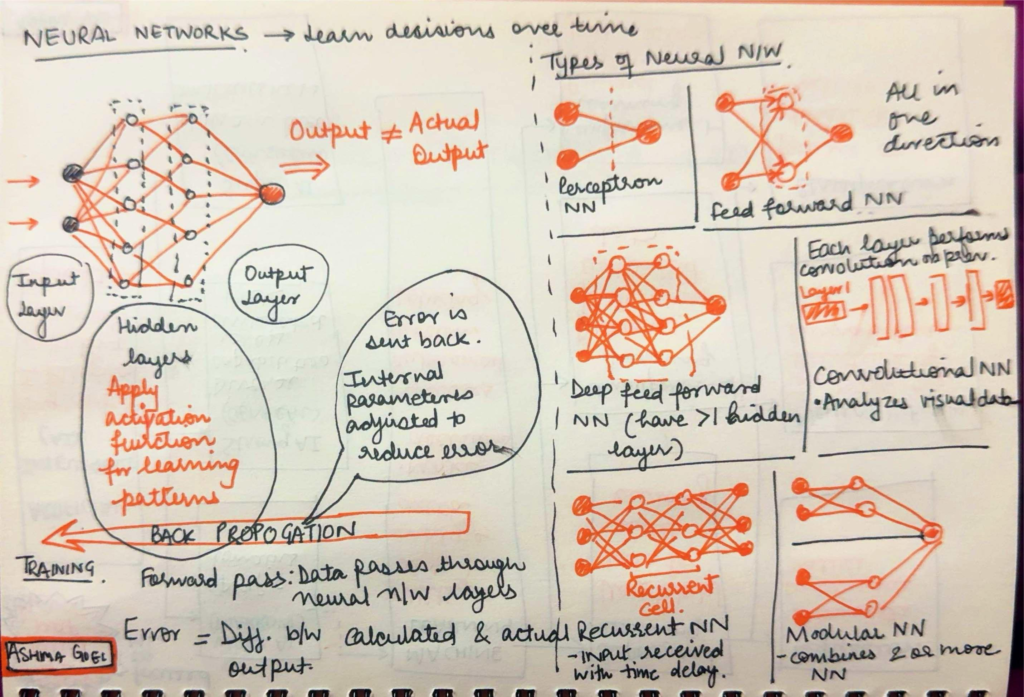

If we talk about the structure of an NN, it's basically made up of the following:

- Neurons: The most basic units, each processing its input and producing an output

- Connections: Links between the neurons that determine how information flows. Each connection has its own weight (or strength).

- Layers: These are mainly divided into three types:

  - Input layer: Receives the initial data

  - Hidden layers: One or more layers that process the data through different stages while extracting complex features

  - Output layer: Produces the final result

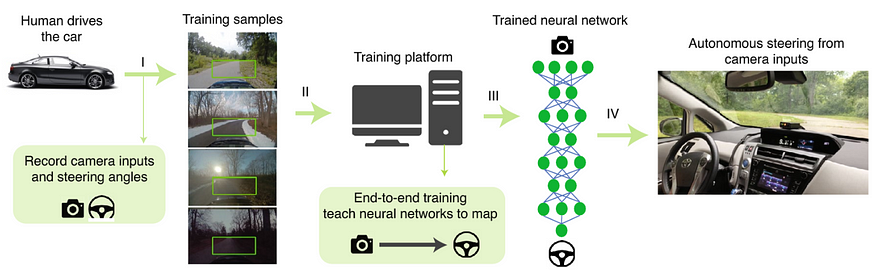
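To make the structure above a little more concrete, here is a minimal sketch in plain Python. Everything in it is an assumption for illustration: the function names (`neuron`, `layer`, `forward`), the sigmoid activation, the network size (2 inputs, 3 hidden neurons, 1 output) and the weight values are all made up, not taken from any real trained model.

```python
import math

def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def neuron(inputs, weights, bias):
    """One neuron: weighted sum of its inputs plus a bias, then an activation."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(total)

def layer(inputs, weight_rows, biases):
    """One layer: every neuron sees the same inputs but has its own weights."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Hypothetical tiny network: 2 inputs -> 3 hidden neurons -> 1 output neuron.
# The weights (connection strengths) are made-up numbers, not trained values.
hidden_weights = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
hidden_biases = [0.0, 0.1, -0.1]
output_weights = [[0.7, -0.5, 0.2]]
output_biases = [0.05]

def forward(inputs):
    """Pass the data through the hidden layer, then the output layer."""
    hidden = layer(inputs, hidden_weights, hidden_biases)
    return layer(hidden, output_weights, output_biases)

prediction = forward([1.0, 2.0])  # the list passed in plays the role of the input layer
print(prediction)
```

Each inner list of weights corresponds to the connections going into one neuron, so changing those numbers changes what the network outputs for the same input.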

One very popular real-life use of NNs is image recognition, especially in self-driving cars. The car's camera captures a stream of images while driving, and this stream is the input to the NN model. The network has already been trained to accurately identify objects in images. It processes each image and identifies the different objects in it, like red lights, other cars, pedestrians, etc. As output, the network provides information about the surroundings of the self-driving car!

How does the Neural Network learn?

Neural Networks go through a process of training. In other words, they use a training set to learn about the data and adjust the network accordingly to get better.

First comes feeding data, that is, providing a massive amount of data to the NN. The network consumes this data and adjusts its connection weights so that the error is minimized. The error is simply the difference between the network’s predictions and the actual outcomes. This adjustment is done via backpropagation.

If we think about it, this is how we as humans also learn.
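Here is a rough sketch of that "predict, measure the error, adjust the weights" loop, using a single neuron with one weight and one bias. The data (points on the line y = 2x + 1), the learning rate and the number of passes are all assumed values picked for illustration. It uses plain gradient descent on one neuron; backpropagation in a real multi-layer network applies the same chain-rule idea layer by layer.

```python
# Training data: inputs paired with the actual outcomes (here, y = 2x + 1).
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

weight, bias = 0.0, 0.0   # the neuron starts out knowing nothing
learning_rate = 0.05      # assumed value: how big each adjustment is

def mean_squared_error(w, b):
    """Average squared difference between predictions and actual outcomes."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

error_before = mean_squared_error(weight, bias)

for epoch in range(2000):             # one epoch = one pass over the data
    for x, y in data:
        prediction = weight * x + bias
        error = prediction - y        # how far off the neuron was
        # Gradient of 0.5 * error**2 with respect to each parameter (chain rule),
        # then a small step in the direction that reduces the error.
        weight -= learning_rate * error * x
        bias -= learning_rate * error

error_after = mean_squared_error(weight, bias)
print(round(weight, 2), round(bias, 2))
print(error_before, error_after)
```

After training, the weight and bias should end up close to 2 and 1, and the error after training should be far smaller than the error before.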

I will keep it simple for this post and delve deeper in the next one. There is so much to say about NNs and their basics that a single post won’t do them justice. 🙂
